DATV in simple terms - Part 3

This article was first published in CQ-TV magazine, issue 210.

So far we have looked at how a signal is converted to and from a stream of digits and how the digital representation of a picture can easily be manipulated. In this part, we will look at the methods used to lessen the burden of handling such vast quantities of digits. The technique of reducing the amount of data is called compression and it is something we are all familiar with, even if we haven’t realised it.

Imagine this scene: “a golden sandy beach stretches into the distance, to the right are rows of palm trees gently sway in the breeze, the left a deep blue sea with waves gently lapping the shore. Overhead, the sky is clear and blue, you hear the distant laughing from children playing at the waters edge.”

OK, that’s the end of my description, I think you see in your minds eye a tropical paradise. If that was a video clip, lasting maybe 10 seconds, it would need about 200,000,000 bytes of storage, my description did it in 283 bytes! That’s compression for you. The trouble of course is that each of you saw a slightly different picture although (hopefully) the theme was the same. Video compression is also like that, some of the picture is taken away so you don’t have to transmit or store it, but not enough that anyone really notices. I know the purist among you are now crying because to the trained eye, the compression is obvious but to the masses, it isn’t apparent at all. The trick is to find a suitable compromise between quality and quantity and the balance of the two is to a degree dependent upon the picture content.

Let’s look at the methods used to save space. There are two methods of compressing data, one is called ‘lossless’ compression, the other ‘lossy’ compression. Lossless is just that, all the information in the picture is retained but by cleverly rearranging the data it is packed into a smaller space. Lossy compression does the same but by making assumptions about human eyesight and by analysing the picture, frame by frame, it also completely discards some of the information. What is left is obviously smaller than the original. For a fixed amount of data, the difference in original size, and the size achieved by each compression method can be huge. Lossless may give a reduction of 30-40% while lossy can reach 80-90% in extreme cases, visually both showing similar end results.

So how does it work? Looking at lossless first, a typical stream of digital picture information, as hexadecimal bytes might be: 10 10 10 10 10 10 10 10 11 11 32 45 96 A6 C5 C5 C5 C5 C5 C5 D6 EF 30 32 I know this isn’t in itself very meaningful but the sequence should serve to show how it can be made smaller. You will notice that although there are several different numbers, there are also some repeating patterns. Lossless compression works by looking for repeats and instead of storing each occurrence of the number, it substitutes a reserved number, sometimes called a ‘sentinel byte’ followed by one copy of the number and a repeat count. So our original sequence of eight 10s might become FF 10 08 where FF is the sentinel, 10 is the actual number and 08 is the number of times it is repeated. Already, eight bytes are squashed down to three. When uncompressing, when the sentinel is found, it and the count are removed and instead, the following number is repeated eight times, taking us back where we started. Of course there has to be provision made for those cases where the data is the same value as the sentinel and could be mistaken for it. In a real video stream, there tends to be long repeats of the same number, for example the black borders around the picture and in the sync intervals. Most compression algorithms also take into account that the most common recurring numbers may change from scene to scene and adapt so the ones with most repeats are given the compression treatment. They also look for repeating sequences of different numbers, for example 10 11 10 11 10 11 10 11 may be recorded as four occurrences of 10 11.

Lossy compression also uses the same method of detecting and counting repeats but takes a step further. In its simplest form it treats each frame of video as an individual image and looks for whole areas that are identical or very near matches. Imagine the picture has a grid of lines across and down it, dividing it into square areas. If more than one square holds the same or similar picture, only the first is used and a duplicate is put in the second and possibly subsequent positions. Obviously, the size of the squares is important, too big and the likelihood of matching ones being found becomes unlikely, too small and the benefit of chopping the picture this way is diminished. This technique is used in still digital camera images and widely used on Internet images because being applied frame by frame makes it suitable for any motionless image. Indeed, most of the images in CQ-TV are stored this way to keep the magazine masters down to a workable size. On photographs, this image compression is referred to as JPEG (Joint Photographic Experts Group) and when used on frames of video is called MJPEG or Motion JPG. Essentially when viewed as a television picture, you actually see a rapid succession of JPEG compressed stills.

Far more commonly used in video work is a similar system called MPEG (Motion Picture Experts Group) which as well as working on a single still frame, take into account that parts of images sometimes stay the same from one frame to the next. This is called ‘temporal’ (meaning ‘time’) compression whereas the JPEG uses only ‘spatial’ (meaning ‘within a space’). This is nothing to do with Doctor Who and his Tardis, it simply is a way of looking at what changes in time as well as what changes in position. Go back to the grid arrangement mentioned earlier, if the grid was overlayed on several adjacent frames of video, the chances are that not only would some squares be the same within a single frame but they would also be in the same place in the following frame. Take for example a newsreader in the television studio, typically they would have a fixed camera on them so their backdrop would remain in the same position from one frame to the next. Only the newsreaders head would be in motion. From a compression point of view, a portion of the picture changes (the head) but most of it is static. To reduce the amount of data, the parts that remain in the same place are not stored or transmitted, only the moving parts are. This has a dramatic effect on the amount of data as instead of sending a whole picture, we only have to send updates of the parts that have changed

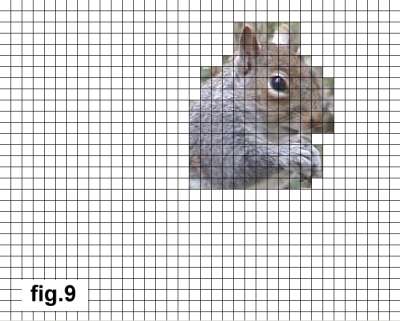

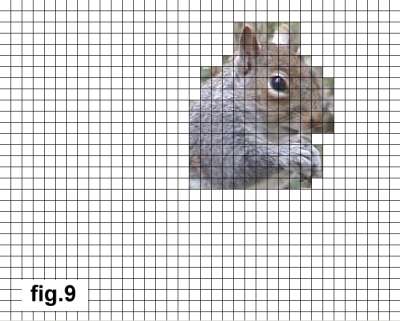

Look at fig.7 and fig. 8 which are actually adjacent frames from a video production, fig. 9 shows the difference between them which is all that would need to be sent to update the first picture to the second. In fact on a motionless picture it is theoretically possible to send just the first frame and an instruction to keep repeating it. In practise, this isn’t such a good idea though because in most scenes there are very minor variations in lighting and movement and if we just keep repeating the same data it quickly mounts up to an appreciable difference to the real picture. There is also a potential problem when a complete scene change occurs and all the squares in the previous frame suddenly become out of date and have to be renewed. To get around these problems, the image is treated in two ways, either it is a whole frame of information or it is an update frame that modifies the last image. Periodically, every few frames a new full frame is sent anyway so the image never becomes ‘stale’. If a scene change occurs, a new full frame is also sent, even if it isn’t due as part of the periodic refresh yet. This compression is called MPEG-1 but there are other variations, notably MPEG-2 which takes the concept of only updating parts where necessary one step further. With this method as well as looking at which regions differ from one frame to the next, it takes into account that many frames contain the same image but with parts of it moved to somewhere else in the picture. For example if the camera panned to the left, each frame would contain the same background detail but displaced slightly from its position in the previous frame.

Instead of looking only at the same grid square in adjacent frames, MPEG-2 looks at neighbouring squares to see if the contents of the first frame have now be repositioned in a new grid square on the second. This takes much more processing power to achieve as not only is the frame by frame comparison being made on the same grid square but also on all its adjacent squares. The outcome of both methods is the same though, as there is no need to convey the data that makes up the detail within the square, only its position, the amount of data is greatly reduced. The MPEG-2 system is used by terrestrial digital television and by almost all satellite broadcasters. It is also used in Europe and most of the World to compress video content for DVD products although in some Asian countries, MPEG-1 is the preferred standard. A less common compression is called MPEG-4 which is similar in operation to MPEG-2 but uses a more efficient lossy compression technique.

Incidentally, MPEG-3 does not exist, the sound compression from MPEG-2, being very efficient, was ‘hi-jacked’ for use in music recording and christened MP3 so although the name MPEG-3 would have been the obvious choice, it was avoided to save confusion with MP3.

Going back to electronics, MJPEG and MPEG are complicated to implement and are best left to the custom chip companies to develop. The cost is not great though as most of the expense is in the design and production of chip masks. When in production, the real cost per device is pennies. Uncompressing MPEG is much easier than would first appear as reconstructing the image is simply a case of taking the incoming data, decompressing it as described under ‘lossy’ above then storing the data in a memory device. This memory is then read out through a DAC to reproduce the video signal. If a full frame video is received, it fully fills the memory, if an update frame is received, only the applicable part is updated. Being a memory device, the updated region will replace what was originally there so only that part of the picture is changed, the rest remains as it was in the previous frame. The complicated part is compressing the video in the first place. In order to look for changes from one frame to the next and particularly, if motion detection is being used as well, as in MPEG-2, it is necessary to hold several frames in a queue. The algorithm can then look back and forth in the queue to see what is can be left alone and what needs updating. Quite apart from the enormous amount of computing power this needs, it creates a delay of several frame periods while they are being held for analysis. If you have consumer digital TV and analogue TV showing the same program material, it is interesting to look at the two side by side. There will typically be a delay of about half a second with the digital picture lagging the analogue one while the compressing and decompressing take place